Published by Dan Cunning on Jan 17, 2020

Tracking your AWS costs

Filed under Features, Web Services

The Problem

Amazon Web Services (AWS) offers an enormous amount of products, with reasonable pricing for a hobbiest (pay for what you use) or business (save on sysadmin wages). However, their monthly bill doesn't have any details: just the service, the charges, the taxes, and the total.

I wanted more granular look in my morning update email, and here's how I got it done.

Capturing the data

Amazon's Creating an AWS Cost and Usage Report guide offers a step-by-step breakdown to follow. I used "include resource IDs" so I can see costs of specific EC2 servers. I also selected "Enable report data integration for Amazon Athena" since it's a database that only charges when you query it.

You have to wait a day or so for Amazon to writing some billing data to your S3 bucket, then proceed to the next step.

Reading the schema

Now we turn to the aptly named AWS Glue to crawl our S3 bucket's new billing data and write its database schema to AWS Athena.

I configured the crawler to "Run Monthly" on the 1st, and allowed Glue to create a new IAM role with permissions to do the work it needs to do. All-in-all the crawler cost me $0.02 to run once. Refer to the previously noted Amazon guide‘s "Configuring Athena data integration" section for more details here.

Querying the costs

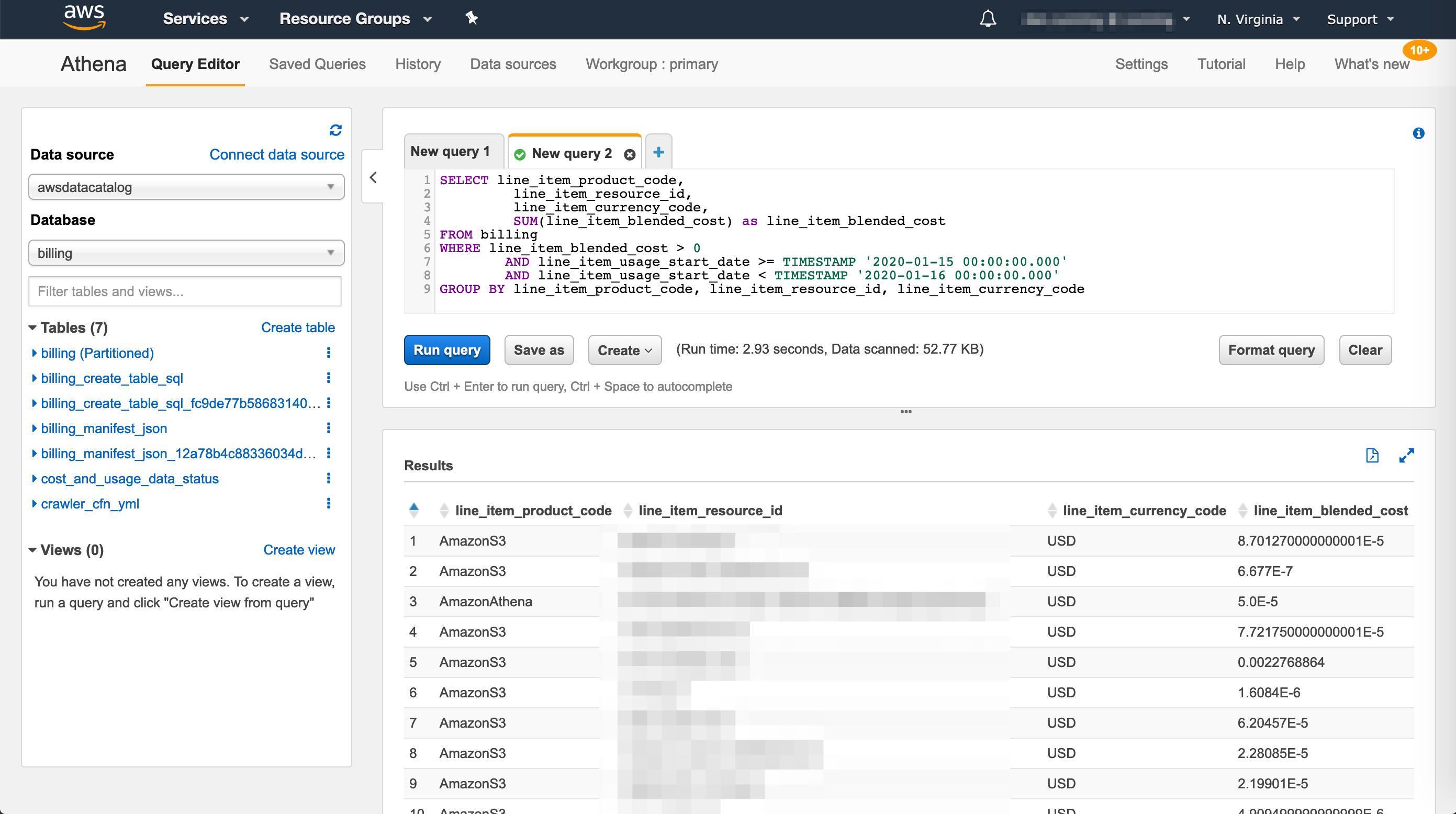

After the Glue crawler finishes, we're ready to start querying. Visit AWS Athena and you'll see a new database and its tables.

Play around with different queries inside Athena's UI. Here's what I use to query daily costs by resource:

SELECT line_item_product_code,

line_item_resource_id,

line_item_currency_code,

SUM(line_item_blended_cost) as line_item_blended_cost

FROM billing

WHERE line_item_blended_cost > 0

AND line_item_usage_start_date >= TIMESTAMP '2020-01-15 00:00:00.000'

AND line_item_usage_start_date < TIMESTAMP '2020-01-16 00:00:00.000'

GROUP BY line_item_product_code, line_item_resource_id, line_item_currency_code

Adding costs to the email

Every morning my system prepares a report with important information from the previous day: active users, support statistics, and application health. I'm excited to add "application cost" to that list! Here's the relevant code:

class GatherAwsCosts < ApplicationService

attr_reader :start_response

attr_reader :wait_response

attr_reader :finish_response

attr_reader :csv

attr_reader :costs

def initialize(date, bucket_name, wait_delay: 1.second)

super

@date = date

@bucket_name = bucket_name

@wait_delay = wait_delay

end

def call

start_query_execution

wait_for_query_to_finish

download_results

process_results

end

private

def start_query_execution

@start_response = athena.start_query_execution(

query_string: sql_statement,

query_execution_context: {

database: "billing",

},

result_configuration: {

output_location: "s3://#{@bucket_name}/billing/results/",

},

work_group: "primary",

)

end

def wait_for_query_to_finish

loop do

sleep @wait_delay

Rails.logger.info "waiting for Athena query to finish"

@wait_response = athena.get_query_execution(

query_execution_id: @start_response.query_execution_id,

)

status = @wait_response.query_execution.status

case status.state

when "SUCCEEDED"

@finish_response = @wait_response

break

when "QUEUED", "RUNNING"

# continue waiting

else

raise "Unhandled query execution state: #{status.state.inspect} (#{status.state_change_reason})"

end

end

end

def download_results

bucket = s3.bucket(@bucket_name)

obj_url = @finish_response.query_execution.result_configuration.output_location

obj_key = obj_url.split("/")[3..].join("/") # remove s3://bucket_name

s3_object = bucket.object(obj_key)

@csv = s3_object.get.body.read

end

def process_results

@costs = []

CSV.parse(@csv, headers: true) do |row|

cost = row["line_item_blended_cost"].to_f

next if cost < 0.01 # ignore costs that are less than a penny

@costs << OpenStruct.new(

product: row["line_item_product_code"],

resource: row["line_item_resource_id"],

cost: cost.round(2),

currency: row["line_item_currency_code"],

)

end

@costs

end

def aws_credentials

{

access_key_id: Rails.application.credentials.dig(:aws, :access_key_id),

secret_access_key: Rails.application.credentials.dig(:aws, :secret_access_key),

region: Rails.application.credentials.dig(:aws, :region),

}

end

def s3

@s3 ||= Aws::S3::Resource.new(aws_credentials)

end

def athena

@athena ||= Aws::Athena::Client.new(aws_credentials)

end

def sql_statement

from = @date.to_s

to = (@date + 1.day).to_s

%(

SELECT line_item_product_code,

line_item_resource_id,

line_item_currency_code,

SUM(line_item_blended_cost) as line_item_blended_cost

FROM billing

WHERE line_item_blended_cost > 0

AND line_item_usage_start_date >= TIMESTAMP '#{from} 00:00:00.000'

AND line_item_usage_start_date < TIMESTAMP '#{to} 00:00:00.000'

GROUP BY line_item_product_code, line_item_resource_id, line_item_currency_code;

)

end

end

# app/mailers/reports_mailer.rb

class ReportsMailer < ApplicationMailer

def morning_report

@date = Time.zone.yesterday

@aws_costs = GatherAwsCosts.call(@date, "your_bucket")

end

end

<!-- app/views/reports_mailer/morning_report.html.erb -->

<table>

<thead>

<tr>

<th>Product</th>

<th>Resource</th>

<th>Cost</th>

</tr>

</thead>

<tbody>

<% @aws_costs.each do |line_item| %>

<tr>

<td><%= line_item.product %></td>

<td><%= line_item.resource %></td>

<td><%= line_item.cost %> <%= line_item.currency %></td>

</tr>

<% end %>

</tbody>

<thead>

<tr>

<th>Total</th>

<th></th>

<th><%= @aws_costs.sum(:cost) %> <%= @aws_costs.map(&:currency).uniq.join(' ') %></th>

</tr>

</thead>

</table>

Wrap-Up

Now every morning I get a complete list of the previous day's AWS expenses. Eventually I may drop it back to every week or two, but I'm not tired of studying the fluctuations yet. Here's some ideas for future work:

- Replace my EC2 instace resource IDs with its name via #describe_instances

- Graph the per day costs in a monthly report

- Turn this idea into a reuseable library

I'm a Ruby on Rails contractor from Atlanta GA, focusing on simplicity and usability through solid design. Read more »